cuda out of memory error when GPU0 memory is fully utilized · Issue #3477 · pytorch/pytorch · GitHub

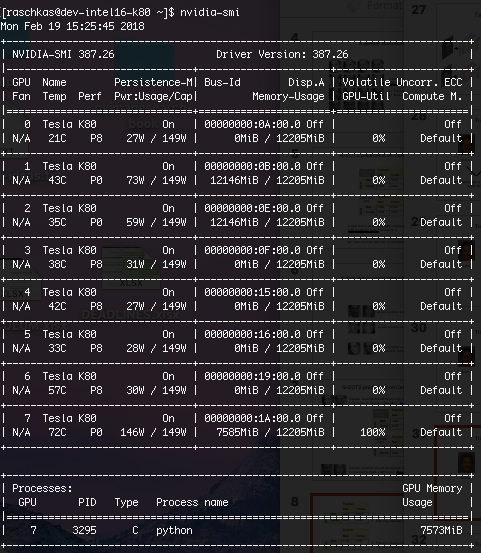

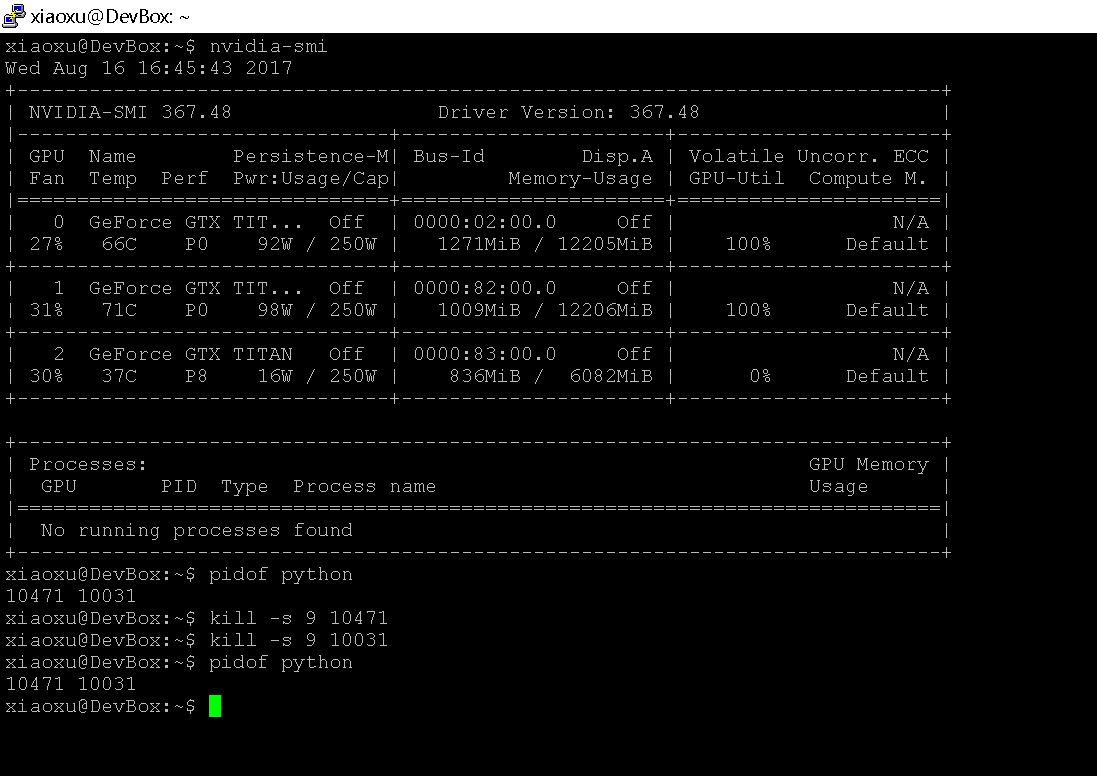

When I shut down the pytorch program by kill, I encountered the problem with the GPU - PyTorch Forums

Graphic card error (nvidia-smi prints "ERR!" on FAN and Usage) and processes are not killed and GPU not being reset - Super User

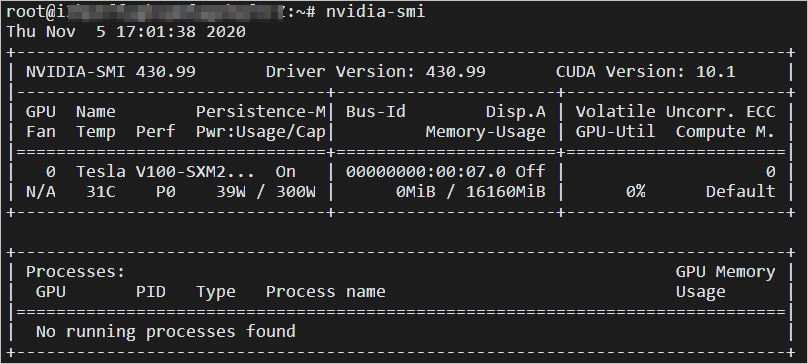

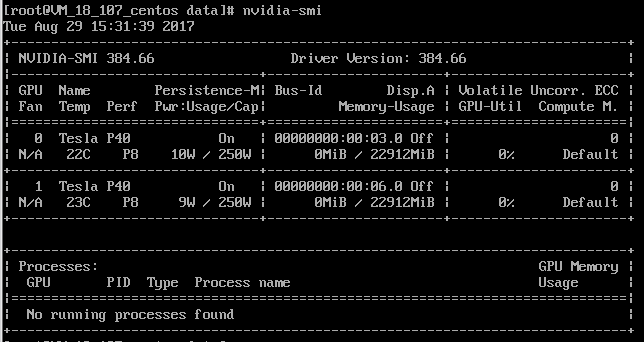

GPU memory is empty, but CUDA out of memory error occurs - CUDA Programming and Performance - NVIDIA Developer Forums

Locked core clock speed is much better than power-limit, why is not included by default? - Nvidia Cards - Forum and Knowledge Base

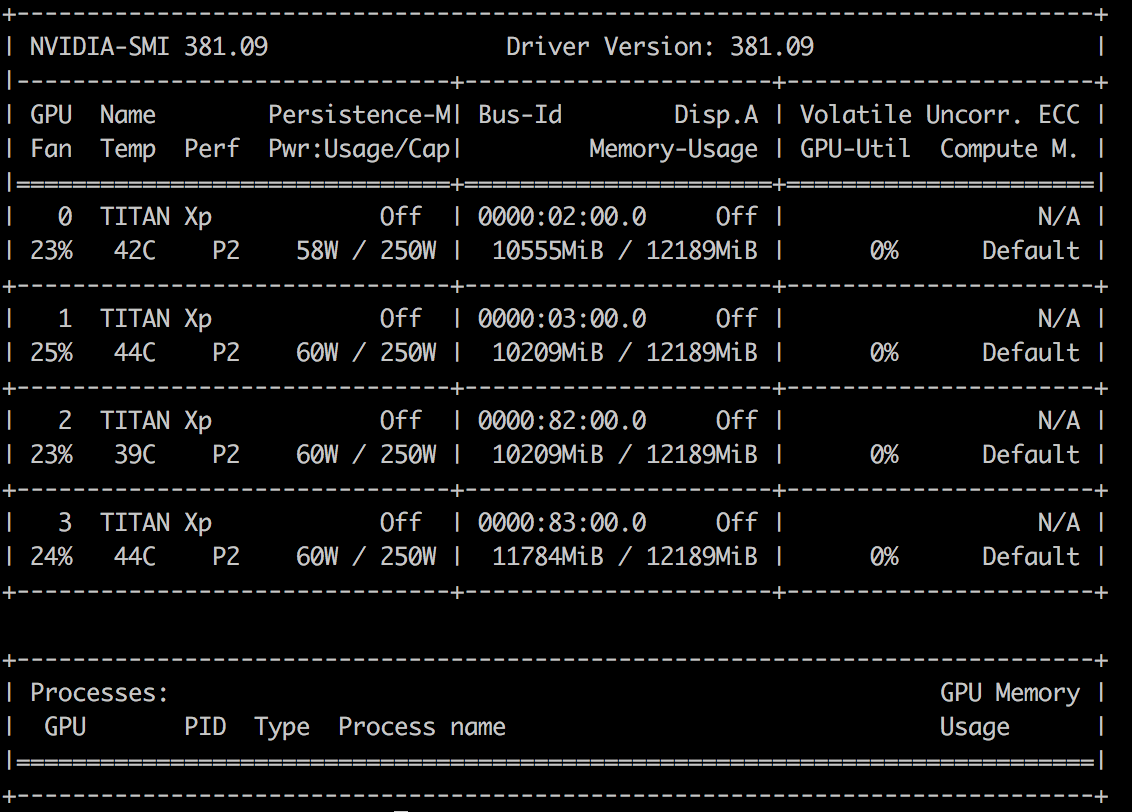

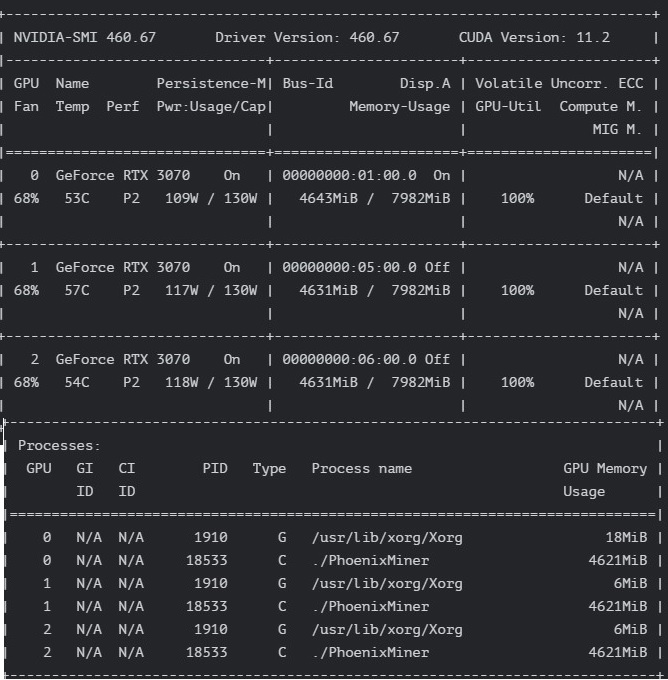

Bug: GPU resources not released appropriately when graph is reset & session is closed · Issue #18357 · tensorflow/tensorflow · GitHub

Nvidia-smi shows high global memory usage, but low in the only process - CUDA Programming and Performance - NVIDIA Developer Forums